I am a Machine Learning Engineer at TikTok, where I work on building Vision Language Models (VLMs) and their applications.

I completed my master’s degree in Data Science at Brown University. During my graduate studies, I was fortunate to work with Prof. Stephen Bach in the BATS Lab on improving the out-of-domain generalization of Large Language Models (LLMs), and with Prof. Shekhar Pradhan and Prof. Ritambhara Singh in the Conversational AI Lab (CAIL) on advancing the reasoning ability of VLMs.

Previously, in 2022, I received my bachelor’s degree with honors from Cho Kochen Honors College, Zhejiang University. I am proud to be a member of the Qiushi Pursuit Science Class at ZJU.

Email: echo @gmail.com | sed 's/^/jiayuzheng99/'

To learn more about me, please check my CV.

🔍 Current Research Interests

My research experience lies largely in Natural Language Processing (NLP) and Vision-Language Models (VLMs). Currently, I am doing research at the Conversational AI Lab on improving language model performance by leveraging images as additional supervision signals. My current research interests include the following areas:

- Efficient NLP & VLM: Improving the performance of LLM and VLM systems on out-of-domain (OOD) tasks, e.g. legal document classification for LLMs and medical image classification for VLMs, in a parameter-efficient and data-efficient manner. In this direction, I have experience in (1) parameter-efficient fine-tuning (PEFT) of LLMs and VLMs: I developed transferable reasoning soft prompts to adapt Mistral-7b-instruct to downstream NLP tasks, and LoRA-tuned Llama-2, Mistral, and Gemma on an OOD legal document classification task; (2) knowledge distillation of LLMs: I trained smaller LLMs on synthetic data generated by larger ones and implemented algorithms such as Noisy Student Training; and (3) tuning-free LLM alignment, such as controllable text generation and alignment of LLMs with In-Context Learning (ICL), e.g. Untuned LLMs with Restyled In-context ALignment (URIAL).

- Explainable Reasoning in LLMs & VLMs: I am interested in improving the reliability and factuality of LLMs’ chain-of-thought (CoT) reasoning with In-Context Learning. I also want to understand LLM behaviors systematically, from the top down, and explain them through studies of latent variables and neuron behaviors. I am also excited to improve VLMs’ compositional reasoning ability, e.g. attribute-object binding and relational understanding, with specialized variants of contrastive learning objectives. I developed Relation-CLIP, which uses hard negative mining and a relation-aware contrastive loss to distinguish the relationships between subjects or their order. In the long term, I believe in the potential of multimodal learning to enhance both language modeling and computer vision.

🔥 News

- 2024.06: 🎉🎉 I’ll join TikTok as a Machine Learning Engineer.

- 2023.06: 🎉🎉 Research internship at Conversational AI Lab, Brown University.

- 2022.09: 🎉🎉 I’ll be joining Brown University as a data science MS student.

- 2022.06: 🎉🎉 Graduated from Zhejiang University as an Outstanding Graduate (ranked in the top 2% of a cohort of 101 students).

📝 Publications and Projects

GourmAIt: Training Robust Deep Neural Networks on Noisy Datasets

Jiayu Zheng

- Final project for CS141 Computer Vision

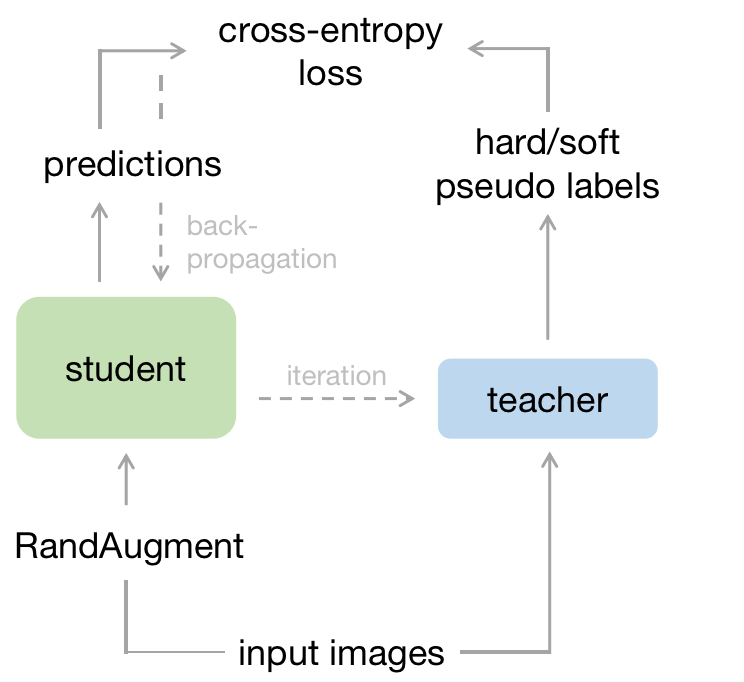

- Implemented Noisy Student Training, a semi-supervised learning (SSL) algorithm published by Google Research, in which pseudo labels generated by a teacher model are used to train an equal-or-larger student model, which then becomes the teacher in the next iteration

- Implemented ResNet with stochastic depth, step-wise unfreezing scheduling, and learning rate scheduling to maximize the performance gain in the fine-tuning phase

TransformerHub: A Collection of Transformer-Based Models

Jiayu Zheng

- Long-term self-study project, adapted from my final project for CS247 Deep Learning

- Implemented various encoder-only, decoder-only, and encoder-decoder Transformer models including Transformer, BERT, GPT, ViT, and CLIP

- Implemented advanced features such as prefix causal attention, sliding window attention, rotary position embedding, and extrapolable position embedding

Cyber Barista: Coffee Bean Quality Prediction With Ensemble Learning

Jiayu Zheng

- Course project for DATA1030 Data Science

- Implemented an ensemble of nine models, including ElasticNet, XGBoost, and a multi-layer perceptron (MLP), to predict the quality score of coffee beans

- Interpreted the model output using feature-based methods: random perturbation, weight magnitude, and SHAP

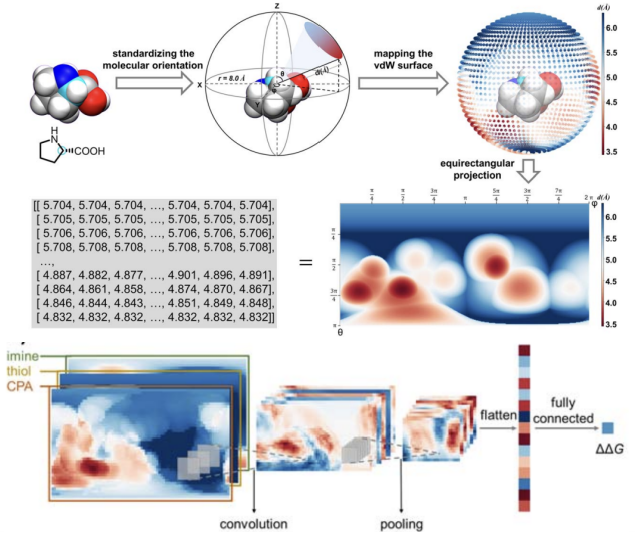

A Molecular Stereostructure Descriptor Based On Spherical Projection

Licheng Xu, Xin Li, Miaojiong Tang, Luotian Yuan, Jiayu Zheng, Shuoqing Zhang, Xin Hong

[project page] [paper]

- A numeric descriptor that converts the spatial, continuous van der Waals potential field of a molecule into a sequence of 2D matrices

- Trained a Convolutional Neural Network (CNN) to predict the stereo-selectivity of chemical reactions

🎖 Honors and Awards

- 2022.06 Outstanding Graduate of Zhejiang University

- 2022.02 Zhejiang University Scholarship, First Prize (top 3%)

- 2021.12 Cho Kochen Honors College Second-Class Scholarship for Elite Students in Basic Sciences

- 2021.12 Cho Kochen Honors College Scholarship for Innovation (international contest award)

- 2021.01 Zhejiang University Scholarship, Third Prize (top 15%)

- 2020.01 Zhejiang University Scholarship, First Prize (top 3%)

- 2020.01 Outstanding Students

📖 Education

- 2022.09 - 2024.05, Sc.M. in Data Science, Data Science Institute, Brown University.

- 2018.09 - 2022.06, Sc.B. in Chemistry with Honors, Cho Kochen Honors College, Zhejiang University.

💬 Services

- 2024.01 - 2024.05, Graduate Teaching Assistant, CS1430 Computer Vision, Brown University.

- 2023.09 - 2023.12, Graduate Teaching Assistant, CS1410 Artificial Intelligence, Brown University.

- 2023.04 - 2023.04, Mentor, College-day Data Science Bootcamp, Brown University.

- 2023.03 - 2023.03, Mentor, Women in Data Science (WiDS), Brown University.

💻 Internships

- 2023.06 - 2023.09, Research Internship, Conversational AI Lab, Brown University.

🪂 Misc

I am a loyal fan of Ubisoft’s Assassin’s Creed franchise. Take a leap of faith into the unknown!

As a heavy drinker of espresso-based coffees, I dream of opening my own coffee shop one day. My favorites are:

- Latte

- Flat White

- Cortado

- Macchiato

I build deep learning models for fun in my leisure time 🦾. I am not kidding…